Report of the NEC voice and sound analysis lab

Best Practices for High Quality, Technology-Enabled, Applied Music Teaching

current update 28 June 2020

by Ian Howell

Abstract

As the music education community looks toward and beyond the fall of 2020, at best we can attempt to mitigate the uncertain safety of making music with others in real spaces. This requires a multi-solution approach, including asynchronous, laggy, and lagless audio and video, even within a single institution, studio, or classroom. Demands will certainly change in reaction to unfolding circumstances beyond the control of any one student, teacher, administrator, or institution; however, the basic principle of providing an excellent quality of education must be upheld. Now is the time to study emerging options, learn how to optimize them, and disseminate this information in a manner that allows for both easy application and rapid pivots. This essay lays out a broad context for considering technology-enabled solutions to the challenges of applied music lessons within academia, but many of the solutions explored will be useful in other contexts. Specific use cases and apps are suggested to cover a wide variety of situations and needs.

Contents

Introduction

Technical and infrastructure challenges

Inclusion criteria

Defining some terms

Optimizing your setup

Ethernet

Router settings

Headphones

Recommended audio and video solutions

Laggy audio

Laggy video

Lagless audio

Current solutions

Pros of SoundJack

Cons of SoundJack

Next steps

Combining audio and video

Use cases

Conclusions

Disclosures and credits

Introduction

Since March of 2020, circumstances have dictated that technology will play a structural role in the near-term survival of both the music industry and the academy. Institutions that embrace technology, both to continue training musicians for acoustic performing spaces and to expand into training for previously underexplored virtual spaces, have a chance of surviving the Covid-19 era. Those that fail to innovate may not.

It is challenging to imagine a successful school of music in 2025 that has not been transformed in some way by the technological adaptation this moment requires. To survive the Covid era and thrive in the future, we must not only provide excellent replacements for temporarily forbidden activities but also train students now for the technology-integrated future that will define music making in the post-Covid era. This could be as complex as staging a real-time, multi-channel, multi-location livestream event or as simple as understanding how to light a subject and compress a video. None of this replaces the need for pedagogical excellence or the transfer of artistry from one generation to the next. Our industry, already heavily reliant on video technology for auditions and online samples, is poised to reimagine how it may use technology toward artistic ends. Most of us, students and teachers alike, do not currently understand how microphones work. There is much to do.

This essay aims to identify challenges and offer best-practice solutions. Some of these solutions are task specific, e.g. this platform vs. that. Others are broadly applicable, e.g. modifying your home router to prioritize your computer’s connection improves the quality of any platform you choose. All solutions sit somewhere in the complex relationship between resources (financial, logistical, human expertise, and time) and audio, latency, and video quality. Nothing is perfect. There is only the right solution for your particular resources. My hope is that you will step out of your current routine to try some of these suggestions, whether you have been doing the same thing for twenty years or you started teaching online in March.

Technical and infrastructure challenges

The technological needs and challenges for a music school in the 2020-21 school year are complex. Many schools will lack even the basic network infrastructure to carry the needed on-campus data traffic. Many lack the financial resources to equip students and faculty with computers, let alone a high quality audio signal chain. Many are situated in parts of the country without a robust broadband infrastructure. These challenges are significant.

Some of the solutions presented here are possible only if these infrastructure challenges have been solved or are solvable by degrees. Any given combination of technologies can always be optimized, but the regional inequality that existed in February of 2020 will, unfortunately, dictate how institutions are able to respond to this crisis.

Music schools have many unique use cases for technology. Some overlap with general academic needs. E.g. teaching a class through a Learning Management System (LMS) will be substantially similar whether the subject is economics or music history, so long as audio and video quality meet the institution’s aesthetic standards. However, the need to provide music lessons, coachings, and ensemble rehearsals/performances reveals flaws in the most commonly used LMS and video conferencing apps (see Howell, et al., 2020). These use cases require high fidelity audio and a variety of latency (network delay) options.

The first step in ensuring the survival of a music school is to acknowledge that multiple solutions are needed. This would be true even in the best of circumstances: a return to campus in the fall of 2020 with social distancing requirements and rigorous testing in place. Given that the current socio-political environment makes it more likely that a strong second wave of Covid-19 hits America this fall, it is prudent to plan for hybrid or completely technology-enabled education. Different parts of the world will manage this on different timetables. To this end, I propose that schools and private teachers identify three primary approaches. They are:

Asynchronous interaction (audio, video, images, or text accessed on demand)

Synchronous laggy (delayed) audio and video

Synchronous lagless (real-time) audio and video

Our goal must be to find optimal solutions for the multiple use cases that fall under and between each approach. If we stand for excellence in acoustic music transmission, we must stand for excellence in digital music transmission as well, both as a stopgap and as a recalibration of what it means to train 21st-century musicians. As the asynchronous approach has been more thoroughly explored by the education community, this document will explore the two synchronous approaches, laggy and lagless, for lessons and chamber music.

Inclusion criteria

I have six criteria that guide the inclusion or exclusion of a platform in this recommendation.

(1) It cannot be a closed system. I do not recommend locking up your scheduling, billing, interaction, recordings, etc. in one place. Where others see convenience, I see the inability to flexibly respond to and gain the benefit of new products in a rapidly changing landscape.

(2) There can be no additional cost to the students. I do not think it is unreasonable for a teacher to pay a small fee for the use of a tool that took time and energy for other professionals to build. But I do not feel comfortable recommending a solution that requires the students to pay a fee or requires the teacher to somehow hide that fee in the cost of the lesson.

(3) The solution must have the potential to scale well. By that I mean the solution should work well for a single teacher one on one, but it should also be able to work for groups of people and offer institutions the ability to improve the quality for much larger groups with the proper investment in tech infrastructure.

(4) It must have cross-platform compatibility, unless literally nothing similar on the market does.

(5) The solution must aim to improve the audio, latency, and video experience, in that order. The history of remote applied music lessons is one of novel solutions to overcome technological shortcomings. It is no ding on the brilliance of those who managed problems on more restrictive platforms to suggest that going forward we should move toward technologies that mitigate rather than accommodate these limitations. The goal of this document is to replicate the in-person experience as much as possible, so that the technology recedes into the background.

(6) Complexity is not automatically bad. If a more complex solution improves the quality, I will recommend we spend time learning how to use it. No one will emerge from this time unchanged. We may as well acquire new skills.

Defining some terms

Complex ideas are often best discussed with specific words and abbreviations. I know this can be a barrier to those who just want to get started, so I would encourage you to check out Dr. Dann Mitton’s ongoing open-source glossary of terms and acronyms.

Just a brief note about two specific terms that I am about to use: peer to peer and server-based. All data that travel over networks, including the Internet, literally occupy physical space as they move, so how any one app transfers data can have a significant impact on the security, quality, network load, and speed of the transfer. In this context, a peer is a specific computer. So peer to peer means data are sent directly from one computer to another. A server is a physical computer either owned or rented by a company. Server-based data transmission means the data travel from your computer to the company’s computer before traveling to your student’s computer. Since it takes time for data to travel, and the detour through a server usually lengthens the route, server-based solutions are almost always slower than peer to peer.
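To make the speed difference concrete, here is a rough back-of-the-envelope sketch. It assumes signals in optical fiber cover about 200 km per millisecond (roughly two-thirds of the speed of light in a vacuum) and ignores the extra delay added by routers and processing, so it gives a floor, not a prediction. The distances in the example are hypothetical.

```python
# Rough propagation-delay floor for peer-to-peer vs. server-based routing.
# Assumption: signals in optical fiber travel about 200 km per millisecond.
# Real connections add router, queuing, and processing delay on top of this.
FIBER_KM_PER_MS = 200.0


def one_way_delay_ms(path_km):
    """Minimum one-way travel time over a fiber path of the given length."""
    return path_km / FIBER_KM_PER_MS


def compare_routes(direct_km, you_to_server_km, server_to_peer_km):
    """Return (peer-to-peer delay, server-based delay) in milliseconds."""
    peer_to_peer = one_way_delay_ms(direct_km)
    server_based = one_way_delay_ms(you_to_server_km + server_to_peer_km)
    return peer_to_peer, server_based


if __name__ == "__main__":
    # Hypothetical: teacher and student 100 km apart, company server 800 km from each.
    p2p, via_server = compare_routes(100, 800, 800)
    print(f"peer to peer: {p2p:.1f} ms, via server: {via_server:.1f} ms")
```

The absolute numbers are small, but the ratio is the point: the same two people can be many times "farther apart" in network terms when every packet makes a detour.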

Optimizing your setup

Whether we accept lag or not, the first question is how we can maximize the audio and video quality. I want to suggest that regardless of the apps used, you will benefit from doing whatever you can from this list of optimizations. E.g., if you use FaceTime on an iPhone, these optimizations will help. You may want to skip ahead to begin playing with specific programs and solutions. If you run into issues and have not explored this list of optimizations, please come back and work through it as you troubleshoot. These optimizations are:

Connect to your router via an ethernet cable

Set a static IP and optimize your router’s quality of service settings (QoS)

Wear open back headphones (and require your students do the same)

Taking each of these in turn:

Ethernet

An Ethernet connection may or may not provide a faster internet connection than WiFi, but it will provide a more stable flow of data. Think of a WiFi signal like ripples on the surface of a pond. Your ripple can be affected by other ripples, absorbed by solid objects, etc. It may take time for such interference to be buffered and sorted by your router before your data continues on to the wired internet. An Ethernet connection is much more stable, immediate, and for what it is worth, secure. You may need to purchase an adapter for your computer and a cable that you run up from your basement. If your device can accept an Ethernet cable, make it happen. It is worth it.
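One way to check the stability claim on your own network is to run the same ping test once over WiFi and once over Ethernet and compare the spread of the results. The Python sketch below is a minimal helper under stated assumptions, not a diagnostic tool: it pulls the `time=... ms` values out of saved `ping` output and reports the average, the worst case, and a simple jitter figure (here defined as the mean change between consecutive pings). Lower jitter means a steadier flow of data.

```python
import re
import statistics

# Matches "time=12.3 ms" (macOS/Linux) and "time=12ms" or "time<1ms" (Windows).
PING_TIME = re.compile(r"time[=<]\s*([\d.]+)\s*ms")


def parse_ping_times(ping_output):
    """Extract round-trip times in milliseconds from raw ping output."""
    return [float(value) for value in PING_TIME.findall(ping_output)]


def summarize(times_ms):
    """Average, worst case, and jitter (mean change between consecutive pings)."""
    if not times_ms:
        raise ValueError("no ping times found")
    changes = [abs(b - a) for a, b in zip(times_ms, times_ms[1:])]
    return {
        "mean_ms": statistics.mean(times_ms),
        "max_ms": max(times_ms),
        "jitter_ms": statistics.mean(changes) if changes else 0.0,
    }
```

For example, save the output of `ping -c 50 192.168.1.1` (macOS or Linux; Windows uses `-n` instead of `-c`) to a text file once per connection type, then call `summarize(parse_ping_times(text))` on each. The address 192.168.1.1 is only a common default for home routers; substitute your own.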

Router settings

While you are getting to know your router, consider modifying your router’s quality of service settings and assigning your device a static IP (Internet Protocol) address. Quality of service (QoS) is a data priority scheme. At any given moment your router is deciding what data to send next. If you have many people working on the same home network, the QoS settings can have a huge impact on the quality of any given computer’s connection. Every router is different, so please start by googling “QoS settings [insert your internet service provider].” Generally speaking, you will access those router settings by typing 192.168.1.1 or 10.0.0.1 into an internet browser while on your home network. Log in with the username and password supplied by your service provider, which is sometimes printed on a sticker attached to the router.
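Conceptually, a QoS priority rule changes the order in which waiting data leave the router. The sketch below is a deliberately simplified model (real routers use a variety of scheduling schemes, and the IP addresses are hypothetical): every waiting packet carries its source address, and the router always sends the waiting packet with the best priority first, in arrival order within the same priority.

```python
import heapq
import itertools


class StrictPriorityRouter:
    """Toy router: always sends the waiting packet with the best priority.

    priorities maps a device's IP address to a number; lower means sooner.
    Devices without a rule get default_priority.
    """

    def __init__(self, priorities, default_priority=10):
        self.priorities = dict(priorities)
        self.default_priority = default_priority
        self._queue = []
        self._arrival = itertools.count()  # keeps first-in, first-out order within a priority

    def __len__(self):
        return len(self._queue)

    def enqueue(self, source_ip, payload):
        priority = self.priorities.get(source_ip, self.default_priority)
        heapq.heappush(self._queue, (priority, next(self._arrival), source_ip, payload))

    def send_next(self):
        _, _, source_ip, payload = heapq.heappop(self._queue)
        return source_ip, payload


if __name__ == "__main__":
    router = StrictPriorityRouter({"192.168.1.50": 0})  # hypothetical lesson computer
    router.enqueue("192.168.1.77", "video stream chunk")
    router.enqueue("192.168.1.50", "lesson audio")
    router.enqueue("192.168.1.77", "game update")
    while len(router):
        print(router.send_next())
```

Even though the lesson audio arrived second, it is sent first; the rest of the household's traffic waits its turn.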

In order to assign QoS priority to your computer, you should first assign your computer a static IP address. An IP address is a series of numbers your router uses to identify a specific device on your network. The number of addresses for any one router is limited, so routers typically assign them dynamically as devices connect and reclaim them when they are no longer in use. Otherwise every device that ever connected to your home network would forever hold onto its address, and you might run out. If you want your router to always treat a specific computer in a specific way, it is best to switch that computer’s default dynamic address to a static one. That way you can simply refer to the IP address and know that the next time you plug that computer into your network, it will immediately receive the preferential treatment from the router.
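On many home routers, the "static IP" option actually creates a reservation: the router's address-assignment service (DHCP) is told to hand the same address to the same hardware (MAC) address every time. That is also why a computer's Ethernet and WiFi connections appear as two separate devices, since each network adapter has its own hardware address. The toy model below, with hypothetical addresses, illustrates the difference between a dynamic lease and a reservation; it is not how any particular router is implemented.

```python
import ipaddress


class HomeRouterDHCP:
    """Toy model of dynamic leases versus reserved ("static") addresses."""

    def __init__(self, pool_start, pool_size, reservations=None):
        first = ipaddress.IPv4Address(pool_start)
        self.pool = [str(first + i) for i in range(pool_size)]
        self.reservations = dict(reservations or {})  # MAC address -> fixed IP
        self.leases = {}                              # MAC address -> dynamic IP

    def request(self, mac):
        """Return the address a device gets when it joins the network."""
        if mac in self.reservations:
            return self.reservations[mac]
        if mac in self.leases:
            return self.leases[mac]
        in_use = set(self.leases.values()) | set(self.reservations.values())
        for ip in self.pool:
            if ip not in in_use:
                self.leases[mac] = ip
                return ip
        raise RuntimeError("address pool exhausted")

    def expire(self, mac):
        """A dynamic lease ends; the address returns to the pool."""
        self.leases.pop(mac, None)
```

The reserved computer always gets the same address, which is what lets a QoS rule keyed to that address keep working; every other device simply gets whatever is free at the moment.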

Both the IP address and the QoS settings are typically found under an “advanced” tab in your router configuration page. Unfortunately every router is a little bit different, but once on the advanced tab look for the words “IP address distribution” or similar. Click through to the complete list and scroll to find your computer. You can confirm the IP address by going to the network page of your computer’s system preferences or control panel. Please note your computer will have a different IP address for the Ethernet connection and WiFi connection. They count as two separate devices. Select whichever connection you want to make static (or repeat for both), click edit, and tick the box to set it as a static IP address.
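If you prefer to confirm your computer's current local address programmatically, the Python sketch below uses a common trick: "connecting" a UDP socket sends no packets, but it makes the operating system choose the network interface it would use to reach the internet, and the socket then reports that interface's address. If both Ethernet and WiFi are active, the result is whichever one the system is currently routing through. The probe address 8.8.8.8 is an arbitrary public address chosen for illustration.

```python
import socket


def local_ip_address(probe_host="8.8.8.8", probe_port=80):
    """Return the local address the OS would use to reach probe_host.

    Connecting a UDP socket sends no packets; it only asks the OS to pick
    a route and an interface. Falls back to 127.0.0.1 when offline.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        try:
            s.connect((probe_host, probe_port))
            return s.getsockname()[0]
        except OSError:
            return "127.0.0.1"  # no route available


if __name__ == "__main__":
    print(local_ip_address())
```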

Once your IP address is static, go back to the advanced tab, find the QoS settings, and assign that static IP address to the highest possible priority. If you are unable to figure out how to do this with your router, take the time to ask a friend or call your internet service provider. This may be the single most beneficial change you make. Once you have figured it out, encourage your students to do the same.

Headphones

Many of us who started teaching online in March got used to using Zoom with a speaker. This actually works well, but it requires Zoom to use an echo cancellation algorithm. This is why one person can’t talk over the other, and why we have come up with ways to signal the desire to interject. If both parties wear open back headphones (headphones that literally let sound from the room into your ears, which feels more natural), the teacher’s sound never reaches the student’s microphone. This breaks the loop that echo cancellation algorithms listen for, improving the quality. You can achieve a similar effect by muting yourself while the other party is talking, and the increase in quality may surprise you. If you and your students can both get into the habit of using open back headphones, I would like to suggest that we can leave the Zoom platform entirely.
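Why headphones help becomes clearer if you look at what an echo canceller has to do. The sketch below uses a normalized least mean squares (NLMS) adaptive filter, a standard textbook technique; it is not Zoom's actual algorithm, which is not public. The filter learns how the teacher's signal leaks from the student's speaker into the student's microphone and subtracts its estimate. The room's echo path here is made up. With headphones, that leak is essentially zero, so there is nothing to estimate or remove.

```python
import random


def nlms_echo_canceller(far_end, mic, taps=8, mu=0.5, eps=1e-6):
    """Subtract an adaptively estimated echo of far_end from mic.

    far_end: samples sent to the loudspeaker (the teacher's signal).
    mic: samples captured by the microphone (echo plus anything else).
    Returns the residual signal that would be sent back to the teacher.
    """
    weights = [0.0] * taps   # running estimate of the speaker-to-mic echo path
    recent = [0.0] * taps    # most recent far-end samples, newest first
    residual = []
    for x, d in zip(far_end, mic):
        recent = [x] + recent[:-1]
        echo_estimate = sum(w * r for w, r in zip(weights, recent))
        error = d - echo_estimate
        power = eps + sum(r * r for r in recent)
        # NLMS update: nudge each weight in proportion to the error and input.
        weights = [w + mu * error * r / power for w, r in zip(weights, recent)]
        residual.append(error)
    return residual


def energy(samples):
    return sum(s * s for s in samples)


if __name__ == "__main__":
    rng = random.Random(0)
    teacher = [rng.uniform(-1.0, 1.0) for _ in range(3000)]
    echo_path = [0.0, 0.0, 0.5, 0.25, 0.1]  # hypothetical delays and attenuations
    mic = [
        sum(h * teacher[n - k] for k, h in enumerate(echo_path) if n >= k)
        for n in range(len(teacher))
    ]
    residual = nlms_echo_canceller(teacher, mic)
    ratio = energy(residual[-500:]) / energy(mic[-500:])
    print(f"fraction of echo energy left in last 500 samples: {ratio:.2e}")
```

Note that if the student sings or plays while the echo is present, the student's own sound looks like "error" to a filter like this one, which is one reason real systems struggle when both people make sound at once.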

Recommended audio and video solutions

Keeping in mind that this is a dynamic area of the market, my current best practice is to use one solution for audio and a separate one for video. I do not think it is reasonable to expect multi-directional, true real-time video in the near term, especially if participants are distributed and on their home networks. If you are on a college campus network, or even if you are able to set up multiple separate spaces on your own home network, the quality rises and the cost for an equivalent experience drops. If you are at all able to explore a local area network solution, I would highly recommend it.

Laggy audio

Assuming that all parties have decided to wear headphones (again, I recommend wired, open back headphones over earbuds, Bluetooth headphones of any kind, or closed back headphones), I recommend using the Cleanfeed web app to transfer audio. If you plan to use Cleanfeed on an iPhone or iPad, note that you must have the most current version of iOS installed. Also know that an iOS device can only join a Cleanfeed call; you must start the call on a non-iOS device.

Cleanfeed uses the Opus codec and allows for multi-directional audio up to 320kbps stereo and 256kbps mono. The interface is clean, the procedure for inviting people to join your calls clear, and the audio quality is like pulling wax from your ears. Most of the controls are hidden from view, but available quickly if you want to change the bit rate of any given participant, raise or lower their volume, or mute them. You can preload audio files to play, and there is a multi track recorder option (not true real-time). There is even an echo cancellation feature if one of your participants is not wearing headphones, but I personally think it degrades the audio quality below Zoom’s original sound setting.
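It can help to translate those bitrates into data volume when you think about your internet plan or a student's mobile data. The arithmetic below treats each stream as a constant bitrate and ignores packet overhead, so real usage will be somewhat higher; it uses 1 MB = 1,000,000 bytes.

```python
def megabytes_per_hour(kbps):
    """Data carried by one constant-bitrate stream over one hour."""
    bits = kbps * 1000 * 3600          # kilobits per second -> bits in 3600 s
    return bits / 8 / 1_000_000        # bits -> bytes -> megabytes


if __name__ == "__main__":
    for label, kbps in [("stereo", 320), ("mono", 256)]:
        print(f"{label} at {kbps} kbps: {megabytes_per_hour(kbps):.1f} MB per hour, per stream")
```

So each 320 kbps stereo stream carries about 144 MB per hour, and each 256 kbps mono stream about 115 MB.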

Cleanfeed is laggy, but since it is audio only, it is less laggy than Zoom. You can even watch each participant’s network latency in real time.

Cleanfeed costs $22/month for educators. There is a free version with a lower maximum bitrate and fewer features. Cleanfeed works in the Chrome browser on computers and in Safari on iPhones and iPads running the most recent version of iOS. Source Connect Now is a free alternative with similarly high bitrates. As of June 2020, its interface is clunkier than Cleanfeed’s, and it does not work on iPhones and iPads.

Laggy video

While Zoom, Teams, FaceTime, and similar video conferencing platforms are widely discussed within the voice community, I am going to suggest that we all switch over to one of several solutions based on WebRTC. WebRTC is an open-source, cross-platform framework for real-time communication that is built into modern web browsers. The name stands for Web Real-Time Communication, which is a bit of a misnomer. Or perhaps aspirational. Regardless, apps built on it offer the potential to control many of the video quality settings missing in other platforms, can include many of the features one would need in a teaching app, and, crucially, support peer to peer video. Because peer to peer is almost always faster than a server-based solution, using a WebRTC app can noticeably cut down on lag time.

While there are many apps out there using WebRTC, I am going to recommend you try Jitsi Meet. You use it from your browser or a phone app. The website has a thorough security statement to share with your administrators, and if your computer supports it, you can even use end-to-end encryption. Note this feature does not currently work on an iPhone. You may also password protect a meeting.

If you are used to using Zoom, which is based on the idea that you have a literal room with a history, it may take a little while to get used to how Jitsi Meet works. Nothing persists: there is no room stored on a server somewhere waiting for you to return. Once you close your room, there is no record that it existed. If you reopen your room, it is a new instance with no connection to previous sessions. You make a room by either crafting a custom URL or accepting a URL randomly generated by the app. You don’t even have to have an account or sign in to anything, although you can certainly integrate with various calendar or organization apps.

If your student only has an iPhone or Android phone, you can even keep audio within Jitsi Meet and disable the automatic gain control, echo cancellation, and other audio processing by adding a block of code to the end of your URL. This might sound complicated, but see use case #2 below for an example and a URL to copy for your own use.

Jitsi Meet automatically defaults to a peer to peer connection when there are only two participants on the call, which keeps the connection as fast as possible. Jitsi Meet also offers multiple quality settings for the video (available on the computer version), which allow you to preserve bandwidth and further decrease latency. Remember that this guide prioritizes latency over video quality, and audio quality over latency. Lastly, if you teach at a college with an IT department, they could choose to run a Jitsi server locally, speeding up even multiparty calls.

Jitsi Meet is free.

Lagless audio

Lagless (really perceptually lagless—there is normal lag when making music in an acoustic space as well) consumer audio and video transmission exists, but public awareness is low, the technological challenges are neither insignificant nor cheap, and each platform requires some skill in order to optimize the quality. There are essentially four steps in the process that must work well. Each of these steps can add time to the transmission. They are:

You need an audio interface capable of quickly converting an analogue mic or instrument signal into a digital signal. We are currently testing latency and OS compatibility of a number of popular interfaces and will publish our results soon. For now, please follow this Facebook post: https://www.facebook.com/307364/posts/10102864933092914/?d=n

The computer processor must be fast enough to manage this digital signal. This means that an older computer, or one with many other apps or background processes running may not be sufficient.

The buffers and various program settings must be set effectively.

The connection to the internet must be appropriate, fast, and stable.

Each of these steps has the potential to add or shave off milliseconds of transmission time. All lagless solutions require an Ethernet cable to reach the fastest and most stable connection, headphones, a high-speed internet connection, and sometimes modifications to the firewall.
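A rough way to see how these steps add up is to total them as a one-way latency budget. In the Python sketch below, buffer delay is simply the buffer length divided by the sample rate; the converter and network figures are placeholders you would replace with your own measurements, and the buffer sizes in the example are hypothetical, not SoundJack defaults. The last function converts a delay into the distance sound travels in air in the same time (about 0.343 m per millisecond at room temperature), which is a helpful way to compare a digital connection with the ordinary lag of two players standing apart in a room.

```python
SPEED_OF_SOUND_M_PER_MS = 0.343  # approx. 343 m/s in room-temperature air


def buffer_delay_ms(frames, sample_rate_hz):
    """Time needed to fill one audio buffer of the given length."""
    return frames / sample_rate_hz * 1000.0


def one_way_latency_ms(input_frames, output_frames, sample_rate_hz,
                       network_ms, converter_ms=0.0):
    """Sum of converter, input buffer, network, and output buffer delays."""
    return (converter_ms
            + buffer_delay_ms(input_frames, sample_rate_hz)
            + network_ms
            + buffer_delay_ms(output_frames, sample_rate_hz))


def equivalent_distance_m(latency_ms):
    """How far apart two players would stand in air for the same delay."""
    return latency_ms * SPEED_OF_SOUND_M_PER_MS


if __name__ == "__main__":
    # Hypothetical: 128-frame buffers at 48 kHz, 10 ms network, 1 ms conversion.
    total = one_way_latency_ms(128, 128, 48_000, network_ms=10.0, converter_ms=1.0)
    print(f"{total:.1f} ms one way, like standing {equivalent_distance_m(total):.1f} m apart")
```

Halving the buffer size halves its contribution, but only if the computer is fast enough to refill the smaller buffers on time; otherwise you hear clicks and dropouts.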

Current solutions

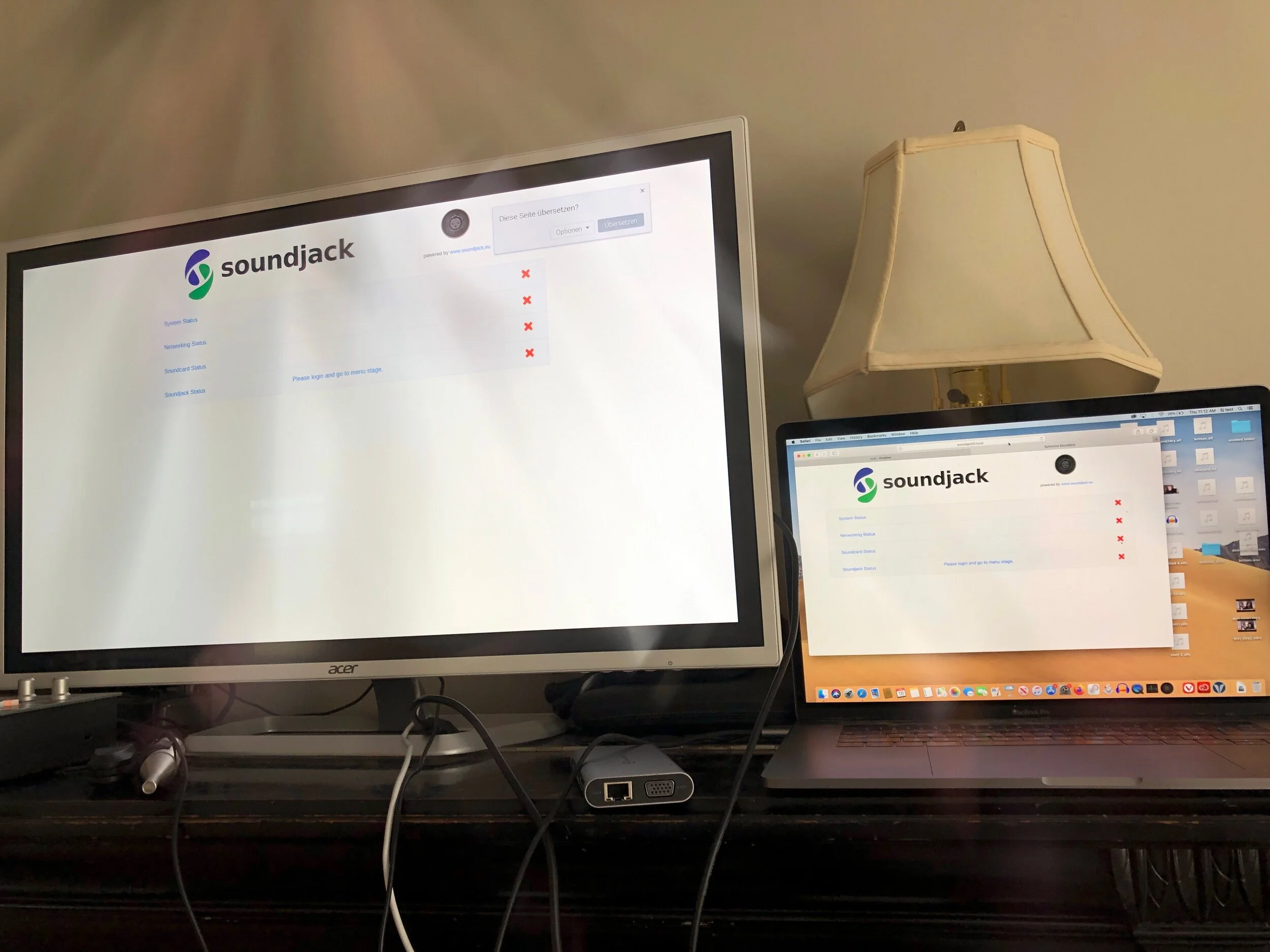

Of the currently available lagless solutions, we have settled on the SoundJack platform. It is not yet perfect and slick, but SoundJack is a robust option that can get great peer to peer results with a small amount of training. If something more comprehensive and user friendly comes along we will switch. As of June 2020 this appears to be the best solution.

In brief, SoundJack is a low latency communications app that runs on macOS, Windows, and Linux. It allows for peer to peer direct connections of audio and some video functionality. Server-based solutions are also available.

SoundJack is free and can be downloaded here.

Pros of SoundJack

SoundJack is actively being developed by Dr. Alexander Carôt. He has several exciting features coming soon, and the platform is reasonably stable. As it currently functions, the feature set is robust. Video works at least one way if the sender is on a faster MacBook Pro computer. An affordable (~$230) Raspberry Pi-based solution made by Symonics appears viable, and our lab is currently testing it. We have successfully connected up to five users for a rehearsal. When set up well, the audio quality is phenomenal and the lag unnoticeable.

Cons of SoundJack

SoundJack is processor intensive, and an older computer can be a limiting factor. Older MacBook Air laptops, for example, seem barely able to sustain a connection without some clicks or higher latency. While the upper number of peer to peer connections is limited only by how powerful the computer is, four to six is probably the most that would work with affordable computers. More than this requires either designating one higher-end computer as the hub and having the other computers connect to it and receive the “local audio + mix” audio option, or setting up a local server to do the same. The former works, but it does not remove each satellite computer’s own audio stream from the returned audio. Depending on the latency, this could introduce a noticeable slapback for each performer. The latter is in beta, but the developer has expressed an interest in helping people install it.
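The slapback problem is easiest to see by comparing what each satellite hears back from the hub. In the sketch below (which models the idea, not SoundJack's internals), a full-mix return sends every participant the sum of all signals, including their own sound delayed by the round trip; a "mix-minus," a term from broadcast audio, subtracts each participant's own signal from the mix sent back to them. The participant names and sample values are hypothetical, and delay is not modeled.

```python
def return_mixes(signals, include_own=True):
    """Build the audio each participant hears back from a hub.

    signals: dict mapping participant name -> list of samples (same length).
    include_own=True models a full-mix return; False models a mix-minus.
    """
    lengths = {len(s) for s in signals.values()}
    if len(lengths) != 1:
        raise ValueError("all signals must have the same length")
    total = [sum(frame) for frame in zip(*signals.values())]
    mixes = {}
    for name, own in signals.items():
        if include_own:
            mixes[name] = list(total)  # includes the listener's own delayed sound
        else:
            mixes[name] = [t - o for t, o in zip(total, own)]
    return mixes


if __name__ == "__main__":
    parts = {"violin": [1, 2], "cello": [10, 20], "piano": [100, 200]}
    print(return_mixes(parts)["violin"])                     # violinist hears self too
    print(return_mixes(parts, include_own=False)["violin"])  # everyone but the violinist
```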

The active development means that updates are sometimes obligatory rather than optional, which can interrupt one’s workflow if caught off guard. You are joining a community project rather than purchasing a commercial product. And bugs do pop up. There is somewhat of an art to setting the buffers, although it is only moderately complex.

Lastly, and this is more a reality check than a con, many factors impact the success of a real-time connection. Distance and processing power are certainly relevant, as is the general data traffic congestion if you are connecting over the Internet. If your students live more than about 400 miles away, you will likely not be able to lower latency to the point that it feels like you are in the same room. SoundJack will likely still give you lower latency than any other option, but if you accept the lag, you may as well use a simpler solution like Cleanfeed. On a college campus or other local area network many of these issues evaporate.

Next steps

I think it is obvious that music schools should wire up every faculty studio and classroom to use SoundJack now, even if the solution is not needed beyond the 2020-21 school year. This would allow voice lessons to take place on campus or between the campus and the homes of your community members, while protecting both teachers and collaborative pianists from the singers. If you teach online lessons out of your home, make sure to schedule a technology check with each student to optimize their connection and teach them how to manage the controls. If you roll it out at your school, make sure to provide training. We will publish a review of the Symonics Raspberry Pi-based Fastmusic box soon. I believe this affordable dedicated SoundJack computer will solve many issues of access in academia.

I understand the hesitance to dive into a community-based low latency solution, especially if you are an administrator used to purchasing technology. However, and I write this with a sober mind aware of the potential for hyperbole, the world is on fire and this works. Music training is about making music with other people. Multitrack projects and virtual choirs served an important purpose; however, we are not training most music students for careers in remote studio work.

Combining audio and video

As of June 2020, my recommendation for combining video and audio is basically the same for lagless and laggy connections. I accept that the highest quality audio available on platforms that meet my inclusion requirements means that the audio and video may be just slightly out of sync with one another. For a laggy connection, I open Jitsi Meet in one browser window and mute the audio. In a second browser window I open Cleanfeed. Adjusting the video quality in Jitsi Meet allows me to better align the audio and video. The misalignment is barely perceptible and the decreased latency over a platform like Zoom is completely worth it. There is a significant decrease in the social hesitation inherent to Zoom because of both the decreased latency and multi-directional audio.

For true lagless audio and multi-directional video, do the same using SoundJack instead of Cleanfeed, but set Jitsi Meet to the lowest video quality. This will not perfectly sync the audio and video; you can’t conduct and be perfectly in time. But it is close enough to interact organically, teach a lesson, demonstrate a gesture or fingering, etc.

For those with the resources, SoundJack on a MacBook Pro is capable of sending real-time synchronous video. For now the feature is in beta, takes an incredible amount of processor power, and starts to fall out of sync with the addition of a second video connection. You would likely not be able to broadcast video on an older computer without crashing SoundJack or introducing noticeable clicks and pops into the audio. The technology is just not there yet with true synchronous real time audio and video, at least in the consumer market.

Use cases

(1) Keep doing what you’re doing. Seriously, this has all been impossibly difficult to deal with and if you have a workflow that you’re happy with, don’t change unless you find the change beneficial. Any of the suggestions above have to be considered in relationship to your current setup. Change if changing adds value, which is always relative to your perception of the improvement in quality.

(2) Everyone has an iPad or iPhone. In this scenario, I encourage you to try out Jitsi Meet with the additional audio settings, especially if headphones can be used. At least compare this to Zoom. The lower latency of the peer to peer connection alone may be an improvement over the platform you have been using. If you were to invest in one thing, I would recommend investing in a microphone that works with an iOS device and an adapter that allows you to plug that mic and a charging cable into your iOS device simultaneously.

To access the full quality audio settings (presumes headphones, see further down for a version with echo cancellation enabled) in Jitsi Meet, paste the following into your browser:

https://meet.jit.si/[insert a series of random words that will be your unique room name that no one would accidentally also choose]#config.disableAP=true&config.disableAEC=true&config.disableNS=true&config.disableAGC=true&config.disableHPF=true&config.stereo=true&config.enableLipSync=false

That might look like a lot, but just copy it into a text file on your desktop, replacing the [ ] brackets and text with a random series of words that is unique to you. Paste it into your browser when you want to open up your room. Your students will only put in the URL up to the #.

Example:

Teacher pastes into browser: https://meet.jit.si/JoinedFromThisArticle#config.disableAP=true&config.disableAEC=true&config.disableNS=true&config.disableAGC=true&config.disableHPF=true&config.stereo=true&config.enableLipSync=false

[Update] Student pastes the same into their browser:

If you click this link, you will be taken to a real Jitsi room and get to meet other people who just clicked that link. If you are not wearing headphones you will hear echo if another participant enters.

If you look at the code, you can turn certain features on or off. For example, if you have no headphones and want to keep the echo cancellation on, the string &config.disableAEC=true can be changed to false. That would look like:

N.b. The way that Jitsi Meet handles audio is not a perfect solution, especially on an iPhone. The Jitsi Meet iPhone app in particular does not appear to respect the automatic gain control URL parameters. Using Jitsi in an iPhone browser currently appears to do a better job with this.
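If you set up rooms for many students, a short script can assemble these URLs so a typo never slips into the flags. The Python sketch below uses only the flag names and values from the full-quality URL in this use case; the helper names and the word list are hypothetical, and the word list is far too short to make truly unguessable room names, so substitute a larger one. It returns both the full URL with the audio settings and the shorter address up to the #.

```python
import secrets
from urllib.parse import quote

JITSI_BASE = "https://meet.jit.si/"

# Flags and values copied from the full-quality URL in use case #2.
FULL_QUALITY_AUDIO = {
    "disableAP": True,
    "disableAEC": True,
    "disableNS": True,
    "disableAGC": True,
    "disableHPF": True,
    "stereo": True,
    "enableLipSync": False,
}

# Hypothetical word list; use a much larger one in practice.
WORDS = ["Amber", "Basso", "Cadenza", "Dolce", "Fermata", "Glissando", "Lento", "Tutti"]


def random_room_name(n_words=4):
    """Join randomly chosen words into a room name."""
    return "".join(secrets.choice(WORDS) for _ in range(n_words))


def jitsi_urls(room, overrides=None):
    """Return (full URL with config flags, plain room URL)."""
    flags = {**FULL_QUALITY_AUDIO, **(overrides or {})}
    fragment = "&".join(f"config.{name}={str(value).lower()}" for name, value in flags.items())
    room_url = JITSI_BASE + quote(room)
    return f"{room_url}#{fragment}", room_url
```

For example, `jitsi_urls("JoinedFromThisArticle")` reproduces the teacher URL from the example exactly, and `jitsi_urls(room, {"disableAEC": False})` gives the variant with echo cancellation left on.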

(3) Student has an iPhone or iPad and teacher has a computer. It is possible for the student to use Jitsi Meet for video and Cleanfeed for audio so long as the student follows this walk through by my colleague at Stetson, Chadley Ballantyne:

The basic instruction is that you need to connect to Cleanfeed first, then start the video meeting in Jitsi, restart the audio in Cleanfeed, then return to the video in Jitsi. To connect an iPhone in this way requires the following:

Double-check that your phone’s iOS is currently up to date.

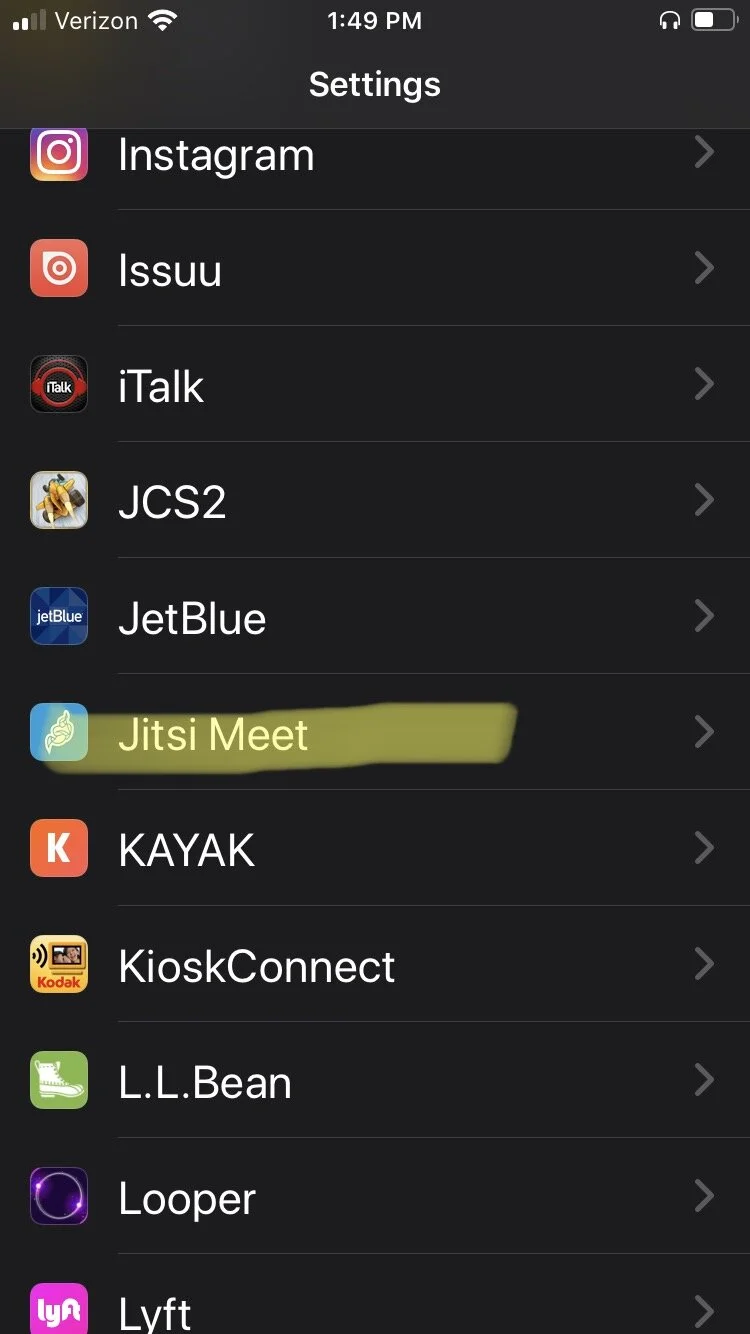

Download the Jitsi Meet app to your phone.

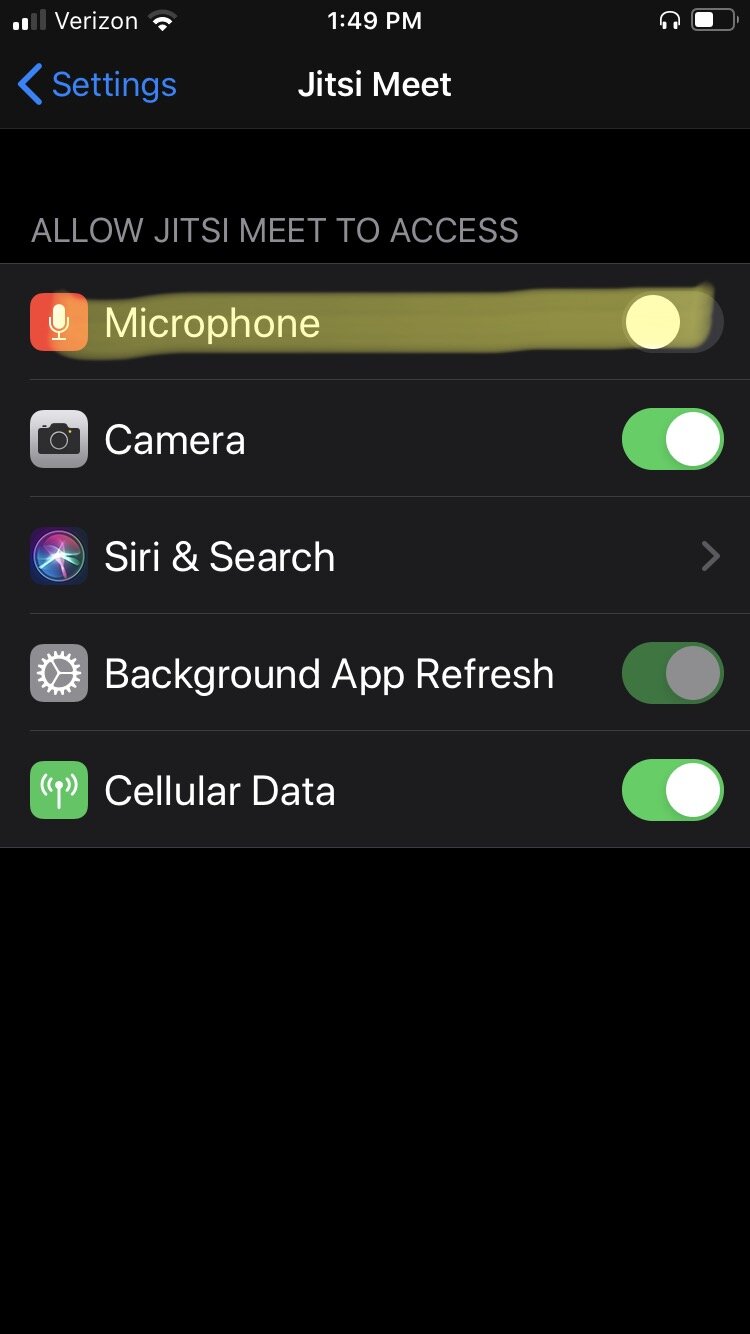

In the settings for Jitsi Meet, disable Jitsi’s access to the iPhone microphone (see images below).

You’ll need to use headphones to avoid creating an echo and to take advantage of the full audio quality of Cleanfeed.

Connect to the host’s Cleanfeed session via the session link using Safari.

While Cleanfeed only works in Chrome on a computer, it is only available in Safari on an iPhone.

Set your microphone in the Cleanfeed session to “use browser setting.”

Connect to the Jitsi meeting in the Jitsi Meet app.

The audio in Jitsi should be muted, as the microphone is disabled, but if not, mute the audio in Jitsi.

At this point, the Cleanfeed session is open in Safari and the video meeting is open in Jitsi.

However, the audio in Cleanfeed will have been interrupted when you connected to Jitsi. Go back to the Cleanfeed session in Safari and unmute your microphone (by tapping on the microphone icon by your name).

You might also have to cycle the microphone selection to reestablish your audio in Cleanfeed. To do this, change the microphone to “iPhone microphone” and then back to “Use browser setting.”

Return to the Jitsi app so that you will be able to use the video feed through Jitsi and the audio through Cleanfeed.

One challenge with this arrangement, for any session longer than 10 to 20 minutes, is that running Cleanfeed and Jitsi at the same time is a big drain on the iPhone battery. You will likely need to plug in your iPhone to make it through an entire session, which means you will need an adapter that lets you connect headphones and the power cable at the same time.

Is this complex? Yes. But it allows for crystal-clear audio and fast video at no additional financial cost to the student beyond the phone they probably already own. The cost is time, but the payoff is likely worth it.

(4) Student and teacher each have a computer or Chromebook. With this setup you can use Jitsi Meet and Cleanfeed simultaneously without any workarounds. Remember to mute the audio in Jitsi Meet. Again, to take advantage of Cleanfeed’s strengths, you will both need to wear open-back headphones.

(5) Student and teacher both have a computer (or a FastMusic SoundJack box) and a second screen (which could be a computer, tablet, iPhone, etc.). If you both have an external audio interface and either a fast enough computer or a dedicated FastMusic box, you could use SoundJack for real-time audio and a second screen for peer-to-peer, near real-time video. It is doubtful that you could run Jitsi Meet on the same computer that is running SoundJack, but on a second screen you can run Jitsi Meet simultaneously with its audio muted. Decrease the video quality to further reduce video latency. Consider setting up multiple rooms on a local area network. Purchase larger screens to display the Jitsi video while playing audio through a FastMusic box. (N.b. Our next paper will review the FastMusic box and offer recommendations for purchasing and using it. At this point I would recommend purchasing one only without the packaged audio interface. For that reason I am not linking to it yet.)

SoundJack lagless with Jitsi 30ms latency—sufficient for collaboration

SoundJack lagless with Jitsi 30ms latency corrected for broadcast
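One way to put a figure like 30 ms in context is to convert latency into the distance sound travels in air over the same time. The sketch below does that arithmetic; it assumes a speed of sound of about 343 m/s (dry air at roughly 20 °C), and the function name is illustrative.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # approximate, dry air at about 20 °C


def latency_to_distance_m(latency_ms: float) -> float:
    """Distance in metres that sound travels in air during a one-way latency."""
    return SPEED_OF_SOUND_M_PER_S * latency_ms / 1000.0


for ms in (10, 30, 60):
    print(f"{ms} ms ≈ {latency_to_distance_m(ms):.1f} m")
```

By this measure, 30 ms corresponds to roughly the acoustic delay between two players standing about 10 m apart in the same room.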

(6) Any of the above, but with more people. Cleanfeed and Jitsi Meet scale as-is. When three or more participants connect to Jitsi Meet, the connection switches from peer-to-peer to a server-based solution, which will increase lag slightly; lowering the video quality still helps counter this. SoundJack will allow somewhere between four and six simultaneous peer-to-peer connections, depending on the processing power of the computers involved, because each additional participant adds a connection for every other participant, which taxes the CPU. SoundJack also offers the option to designate one computer as a hub that reflects everyone’s sound to all participants. In this setup, lower-powered computers only need to make a single connection to the hub computer. Unfortunately, one’s own sound is mixed into the reflected sound, so at higher latencies it can feel like your sound is echoing off a wall. At lower latencies this effect disappears.
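The difference between a full peer-to-peer mesh and a hub can be sketched as simple counting. This is a back-of-the-envelope model, not a description of SoundJack's internals: it assumes every pair of participants in a mesh keeps one two-way connection, and that the hub is one of the participants' own computers. The function names are illustrative.

```python
def mesh_connections_per_participant(n: int) -> int:
    """In a full mesh, each participant connects to every other participant."""
    return n - 1


def mesh_total_links(n: int) -> int:
    """Total two-way links in a full mesh of n participants (n choose 2)."""
    return n * (n - 1) // 2


def hub_total_links(n: int) -> int:
    """With one participant acting as hub, every other participant keeps one link."""
    return n - 1


for n in range(2, 7):
    print(
        f"{n} players: mesh {mesh_connections_per_participant(n)} per player, "
        f"{mesh_total_links(n)} links total; hub {hub_total_links(n)} links total"
    )
```

Going from four to six players raises the mesh total from 6 to 15 links, while each non-hub computer in the hub layout keeps just one. The hub computer itself still handles a link to every other player, so it should be the most capable machine in the group.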

If you are interested in going further with these solutions, and especially if you have an IT department willing to get involved, both Jitsi and SoundJack have local server-based solutions. The benefits of using a local server include lower processor load per participant without adding much latency.

(7) Mixed solutions. Given the flexibility that online education enables, we can consider novel combinations. For example, a pianist, singer, and teacher could all be in different rooms on the same campus. A real-time audio and minimally laggy video connection would work very well on a local area network. Alternatively, with a headphone splitter, two of those participants could share a room. Or those on campus could enjoy a real-time connection while those off campus connect with a lagless or laggy solution. The FastMusic box also streams audio on a local area network. The ability to pick and choose among a variety of tools allows us to solve these problems in new ways, and the high-quality audio and video capabilities of current mobile phones only expand such solutions.

Conclusions

This moment is strange. Its contours are measured as much by how music teachers and administrators think the next school year will unfold as by what we are currently able to do safely. Some think the fall will proceed as usual. Others have already announced that teaching will be online for the foreseeable future. Some are watching the current spike in cases across the American South and rethinking the wisdom of poorly controlled reopening plans. More research will emerge in the coming months, but given that Covid-19 cases are on the rise once again, it seems prudent to plan for worst-case scenarios. Not because they will happen in all places, but because they may happen. And the nature of an exponential growth curve is that when it ramps up, it ramps up quickly. We need to own that we all did the best we could in March. But it will not be enough in September. To plan is not to concede; it is a way to keep options open. To not act now is as consequential a choice as any. Let us take the steps we can to preserve high-quality music training while minimizing the needless spread of the SARS-CoV-2 virus. It is a moral imperative.

Disclosures and Credits

The author has no financial relationship with any of the companies or products mentioned herein. The NEC voice and sound analysis laboratory receives ongoing, undirected funding from the New England Conservatory of Music, with gracious support from Andrea Kalyn, President; Thomas Novak, Provost; and Bradley Williams, Chair of Voice. The author wishes to thank Kayla Gautereaux (NEC), Nicholas Perna (Mississippi College), Chadley Ballantyne (Stetson), Joshua Glasner (Clarke), and Theodora Nestorova (NEC) for their ongoing discourse and assistance with explorations and experimentation.